Introduction

- Why AI content is booming: GPT-n models, generative visuals, deepfakes.

- Opportunities: creative assistance, efficiency gains.

- Risks: disinformation, fraud, erosion of trust.

- Purpose of the article: practical insights for readers.

1. What Counts as “AI‑Generated Content”?

- Definitions: text (ChatGPT), images (DALL·E, Midjourney), audio (voice clones), video (deepfakes).

- Real‑world examples: AI news articles, deepfake politicians.

- How detection tools attempt to flag them.

2. How It’s Made: The Tech Behind the Curtain

- Neural networks and training data.

- Transformers for text; GANs and diffusion models for images and video.

- Prompt engineering: how users steer the output.

- Voice and video synthesis pipelines.
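Transformer text generators produce output one token at a time: the model scores every candidate next token, the scores are turned into probabilities, and one token is sampled. Alongside the prompt itself, a sampling setting called temperature is a common user control. The sketch below is illustrative only: the five-word vocabulary and its scores (logits) are made up rather than taken from a real model, and the function names are hypothetical.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw model scores (logits) into probabilities.

    Lower temperature sharpens the distribution (more predictable text);
    higher temperature flattens it (more varied text).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The sky is"
vocab = ["blue", "clear", "falling", "green", "loud"]
logits = [4.0, 3.0, 1.0, 0.5, 0.1]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: P(blue)={probs[0]:.2f}")

rng = random.Random(0)  # fixed seed so the run is repeatable
print([sample_next_token(vocab, logits, 0.7, rng) for _ in range(5)])
```

Lower temperatures concentrate probability on the top choice, which is one reason low-temperature output tends to read as more predictable and repetitive.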

3. Real Use Cases: The “Good” AI

- Journalism: AI drafts, fact-checking assistants.

- Marketing: personalized content at scale.

- Education: tutoring and accessibility tools.

- Film and arts: digital enhancements and stylistic experiments.

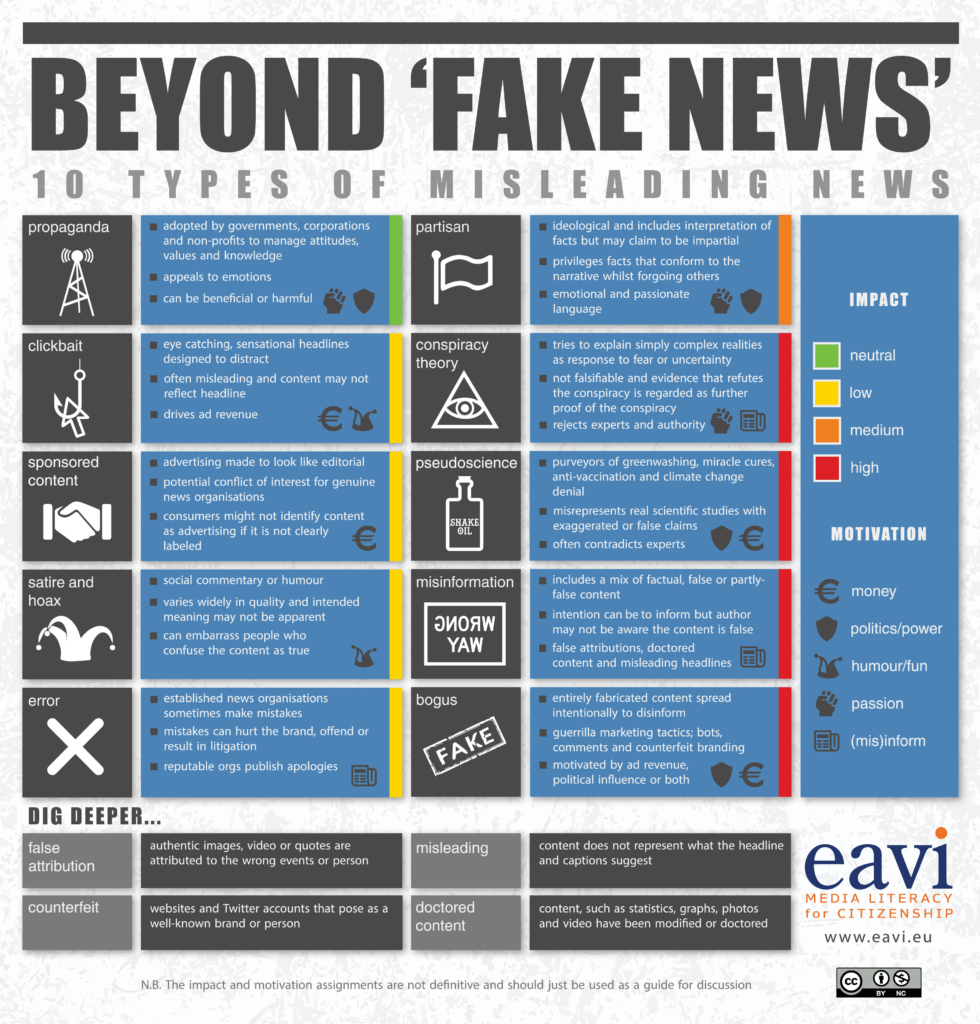

4. The Trouble with “Fake”: Risks & Real‑World Harms

- Misinformation and election interference.

- Reputation damage from AI-generated fake videos/audio.

- Legal and privacy issues: use of a person's likeness without consent, misrepresentation.

5. Spotting the Fakes: Tools & Tactics

- Technical indicators: artifact detection, metadata inconsistencies.

- Popular tools: Deepware, Sensity AI, GPT detectors.

- Human strategies: reverse image search, source validation.

- Image placement #5: UI screens of an AI-detection tool.

- Checklist for readers.
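Reverse image search engines can match a photo even after it has been resized, recompressed, or lightly edited. Their exact methods are proprietary, but perceptual hashing is a simple, well-known technique of this kind. The sketch below implements an average hash and assumes the image has already been shrunk to an 8×8 grayscale grid (an image library would normally do that step); the example grids and function names are hypothetical.

```python
def average_hash(pixels):
    """Compute a 64-bit average hash from an 8x8 grid of grayscale values (0-255).

    Each bit is 1 if that pixel is brighter than the grid's mean, else 0.
    """
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grid")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming_distance(a, b):
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")

# Hypothetical grids: a gradient, a uniformly brightened copy, and an unrelated checkerboard
original = [[x * 30 + y for x in range(8)] for y in range(8)]
brightened = [[min(255, p + 10) for p in row] for row in original]
checker = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]

h0 = average_hash(original)
print(hamming_distance(h0, average_hash(brightened)))  # 0: brightening does not change the hash
print(hamming_distance(h0, average_hash(checker)))     # 32: a different picture
```

Two hashes only a few bits apart suggest the same underlying picture; where to set that cut-off depends on the collection being searched.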

6. The Arms Race: Generators vs. Detectors

- Ongoing technological tug-of-war.

- Limitations: adversarial examples that slip past detectors, and watermarks that editing or paraphrasing can weaken or strip.

- How ethics and regulation may shift the balance.
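One research proposal for text watermarking (the “green list” watermark of Kirchenbauer et al., 2023) works roughly like this: at each step the previous token seeds a pseudo-random split of the vocabulary, the generator favors “green” tokens, and a detector that knows the hashing rule counts green tokens and computes a z-score, z = (g − γT) / √(T·γ(1 − γ)), for g green tokens out of T. The toy version below is a simplification, not the paper's implementation: it hashes word pairs instead of splitting a real model's vocabulary, and its “generator” always picks a green word rather than gently biasing probabilities. The value γ = 0.25 and all names are assumptions.

```python
import hashlib
import math
import random

GAMMA = 0.25  # assumed fraction of words that count as "green" at each step

def is_green(prev_word, word, gamma=GAMMA):
    """Decide pseudo-randomly (but reproducibly) whether `word` is green after `prev_word`.

    Simplification: hashing the word pair stands in for using the previous
    token to seed a random split of a real model's vocabulary.
    """
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(words, gamma=GAMMA):
    """How far the green-word count exceeds chance, in standard deviations."""
    t = len(words) - 1  # number of scored (previous, current) pairs
    if t < 1:
        raise ValueError("need at least two words")
    g = sum(is_green(a, b, gamma) for a, b in zip(words, words[1:]))
    return (g - gamma * t) / math.sqrt(t * gamma * (1 - gamma))

def toy_watermarked_text(vocab, length, gamma=GAMMA):
    """Hypothetical generator: after each word, pick a green word if any exists."""
    words = [vocab[0]]
    for _ in range(length):
        greens = [w for w in vocab if is_green(words[-1], w, gamma)]
        words.append(greens[0] if greens else vocab[-1])
    return words

vocab = [f"word{i}" for i in range(40)]
marked = toy_watermarked_text(vocab, 200)
rng = random.Random(0)
plain = [rng.choice(vocab) for _ in range(201)]  # unwatermarked: words chosen blindly
print(f"watermarked z = {watermark_z_score(marked):.1f}")
print(f"plain z       = {watermark_z_score(plain):.1f}")
```

Editing or paraphrasing enough words pulls the score back toward zero, which is one reason watermarking alone does not settle the arms race.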

7. What You Can Do: Guidelines for Readers & Publishers

- For individuals: ask questions, be suspicious, use tools.

- For journalists: be transparent and disclose when AI is used.

- Platform policies: watermarking, verification, labelling.

- Call to action: support literacy and responsible AI.
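A simple form of verification that publishers can offer is to post a cryptographic hash (such as SHA-256) of each original file, so readers can check whether a circulating copy is byte-for-byte identical. The sketch below uses Python's standard hashlib; the post-a-hash workflow and the function names are assumptions for illustration. Any re-encoding, even a harmless resize, changes the hash, so a mismatch means “not the original file” rather than “fake”. Provenance standards such as C2PA go further by attaching digitally signed metadata.

```python
import hashlib
import os
import tempfile

def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, reading it in chunks to limit memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def matches_published_hash(path, published_hex):
    """True if the file's SHA-256 digest equals the (hypothetical) hash the publisher posted."""
    return sha256_of_file(path) == published_hex.strip().lower()

# Demo with a temporary file standing in for a downloaded image
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"original press photo bytes")
published = hashlib.sha256(b"original press photo bytes").hexdigest()
edited = hashlib.sha256(b"edited press photo bytes").hexdigest()
print(matches_published_hash(tmp.name, published))  # the untouched copy matches
print(matches_published_hash(tmp.name, edited))     # a hash of different bytes does not
os.remove(tmp.name)
```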

8. Future Outlook & Conclusion

- Emerging trends: audio synthesis, VR/AR fakes, AI watermarking.

- The future of truth in media.

- Re-emphasis: vigilance, tools, ethical use.

✅ Sample Section: “Spotting the Fakes”

Here’s a fleshed-out snippet (~200 words):

How to Detect AI‑Generated Text or Images in 4 Steps

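- Analyze the metadata

Check the file's metadata (EXIF, XMP, or PNG text fields). Some AI tools strip it; others embed generator tags or C2PA Content Credentials that name the tool. Missing metadata proves little on its own, since many social platforms strip it on upload.

- Look for artifacts and anomalies

In images: inconsistent shadows, malformed hands or extra fingers, distorted teeth. In text: an overly uniform tone, repetition, or logical slips.

- Reverse-search it

Run suspicious photos through Google Images or TinEye to find earlier or original versions. For text, AI-detection tools such as GPTZero can give a rough signal, but treat their scores as probabilities, not proof; OpenAI withdrew its own AI text classifier in 2023, citing its low accuracy.

- Check the source

Favor content from verified or transparent publishers. If unsure, contact the publisher and ask for originals or citations.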
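To try the metadata step on a PNG: some image generators write their settings into the file's text chunks (the AUTOMATIC1111 Stable Diffusion web UI, for example, stores the prompt under a `parameters` keyword). The standard-library sketch below lists a PNG's tEXt chunks. It is a minimal illustration under stated limits: it reads only uncompressed tEXt chunks (not iTXt, zTXt, EXIF, or C2PA data), the function names are hypothetical, and the sample file is synthetic.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_text_chunks(data):
    """Return {keyword: text} for every tEXt chunk in raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # tEXt data is "keyword\0text", both Latin-1 encoded
            keyword, _, text = body.partition(b"\x00")
            found[keyword.decode("latin-1")] = text.decode("latin-1")
        if ctype == b"IEND":
            break
        pos += 12 + length  # 8 header bytes + data + 4 CRC bytes
    return found

def make_chunk(ctype, body):
    """Build one PNG chunk (used here only to create a test file)."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)

# Hypothetical file: signature + one text chunk + end marker (no real pixels)
sample = (PNG_SIGNATURE
          + make_chunk(b"tEXt", b"parameters\x00a castle at sunset, Steps: 20")
          + make_chunk(b"IEND", b""))
print(png_text_chunks(sample))
```

For a real file, pass in the bytes read with `open(path, "rb").read()`. An empty result is not evidence either way.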

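Toolbox:

- Sensity AI: flags deepfakes in video.

- DeepFake-o-Meter: an open web platform from the University at Buffalo that runs uploaded images, video, or audio through several deepfake detectors.

- Hugging Face GPT-2 Output Detector: estimates whether text was AI-written; it was trained on GPT-2 output, so its scores on text from newer models are unreliable.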
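Text detectors differ in method, and most do not publish full details. One signal that is often discussed (GPTZero, for instance, describes using “perplexity” and “burstiness”) is how much sentence length varies: human writing tends to mix short and long sentences. The toy measure below computes the coefficient of variation of sentence lengths with a naive splitter. It is a hypothetical illustration of that single signal, far too weak to classify text on its own.

```python
import re
import statistics

def sentence_lengths(text):
    """Split after ., ! or ? followed by whitespace, then count words per sentence (naive)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length: population stdev / mean.

    Lower values mean more uniform sentences. Returns 0.0 for fewer than two sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The model writes a sentence. The model writes another one. The model adds a third line."
varied = "Stop. The storm rolled in over the harbor faster than anyone on the pier expected. Then silence."
print(f"uniform: {burstiness(uniform):.2f}, varied: {burstiness(varied):.2f}")
```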